👋 About Me

I’m currently a 2nd-year PhD student at Tsinghua University Shenzhen International Graduate School, supervised by Prof. Yansong Tang and Prof. Jiwen Lu. I received my bachelor’s degree from the Department of Automation, Tsinghua University, in 2023.

My research interests lie in Computer Vision, especially Video Generation and Video Understanding.

✨ News

- 2025-09: Our work on Image Generation (SRPO) is currently ranked #1 on Hugging Face Trending.

- 2025-06: One paper on Image Editing is accepted to ICCV 2025

- 2025-04: One paper on Controllable Video Generation is accepted to SIGGRAPH 2025

- 2024-07: One paper on Embodied Vision is accepted to ECCV 2024

- 2024-03: One paper on Video Understanding (Narrative Action Evaluation) is accepted to CVPR 2024

- 2023-03: One paper on Video Understanding (Action Quality Assessment) is accepted to CVPR 2023

🔬 Publications and Manuscripts

(* indicates equal contribution)

Xiangwei Shen, Zhimin Li, Zhantao Yang, Shiyi Zhang, Yingfang Zhang, Donghao Li, Chunyu Wang, Qinglin Lu, Yansong Tang
Preprint, 2025. [PDF] [Project Page]
SRPO can effectively restore images from highly noisy states, which makes the optimization process more stable and less computationally demanding.

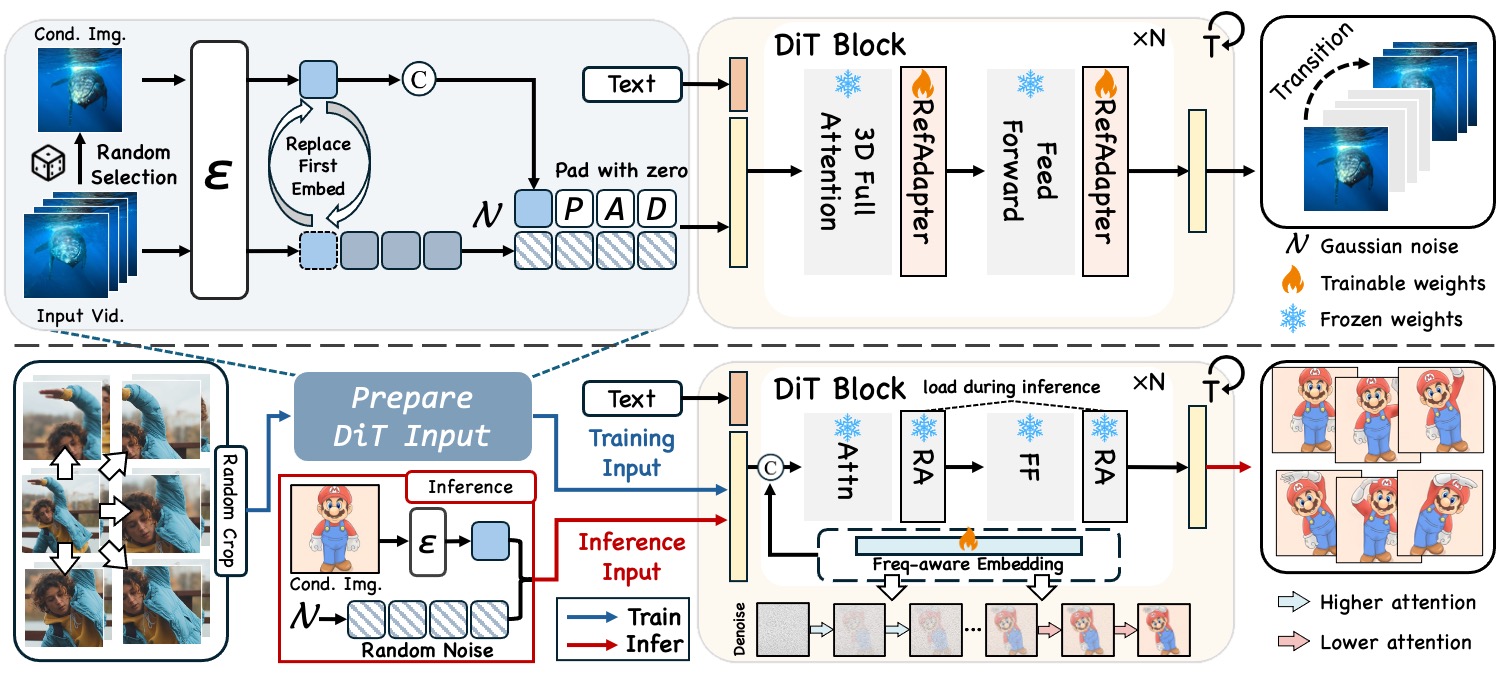

Shiyi Zhang*, Junhao Zhuang*, Zhaoyang Zhang, Yansong Tang
ACM SIGGRAPH (SIGGRAPH), 2025. [PDF] [Project Page]
We achieve action transfer in heterogeneous scenarios with varying spatial structures or cross-domain subjects.

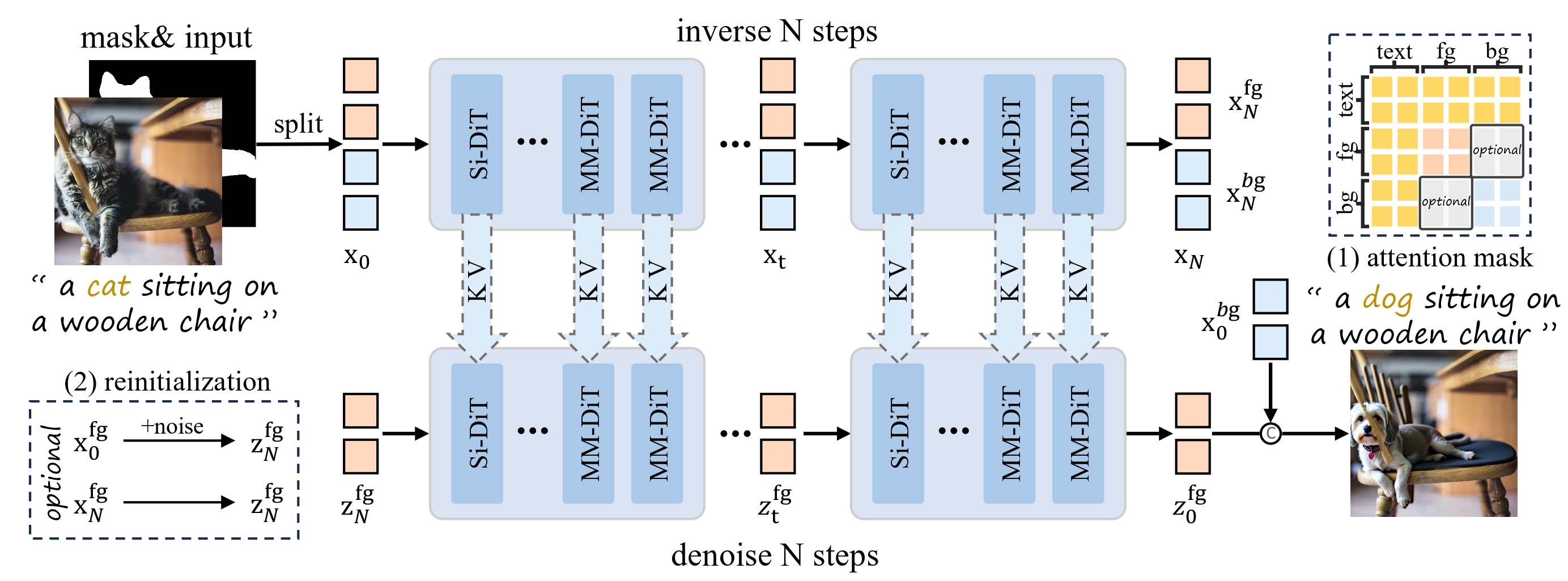

Tianrui Zhu*, Shiyi Zhang*, Jiawei Shao, Yansong Tang
International Conference on Computer Vision (ICCV), 2025. [PDF] [Project Page]
We propose KV-Edit, which addresses the challenge of background preservation in image editing by retaining the key-value pairs of the background, and effectively handles common semantic edits.

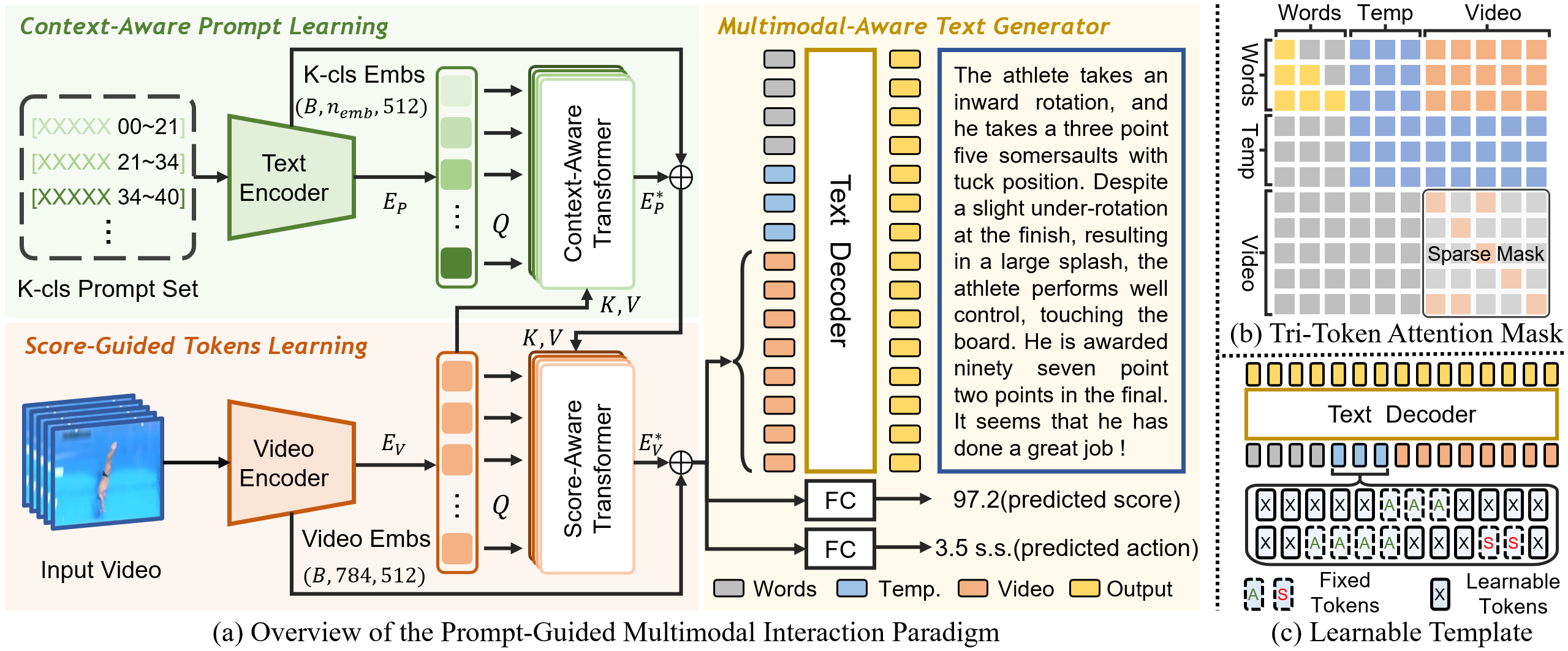

Shiyi Zhang*, Sule Bai*, Guangyi Chen, Lei Chen, Jiwen Lu, Junle Wang, Yansong Tang
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024. [PDF] [Project Page]
We investigate a new problem called narrative action evaluation (NAE) and propose a prompt-guided multimodal interaction framework.

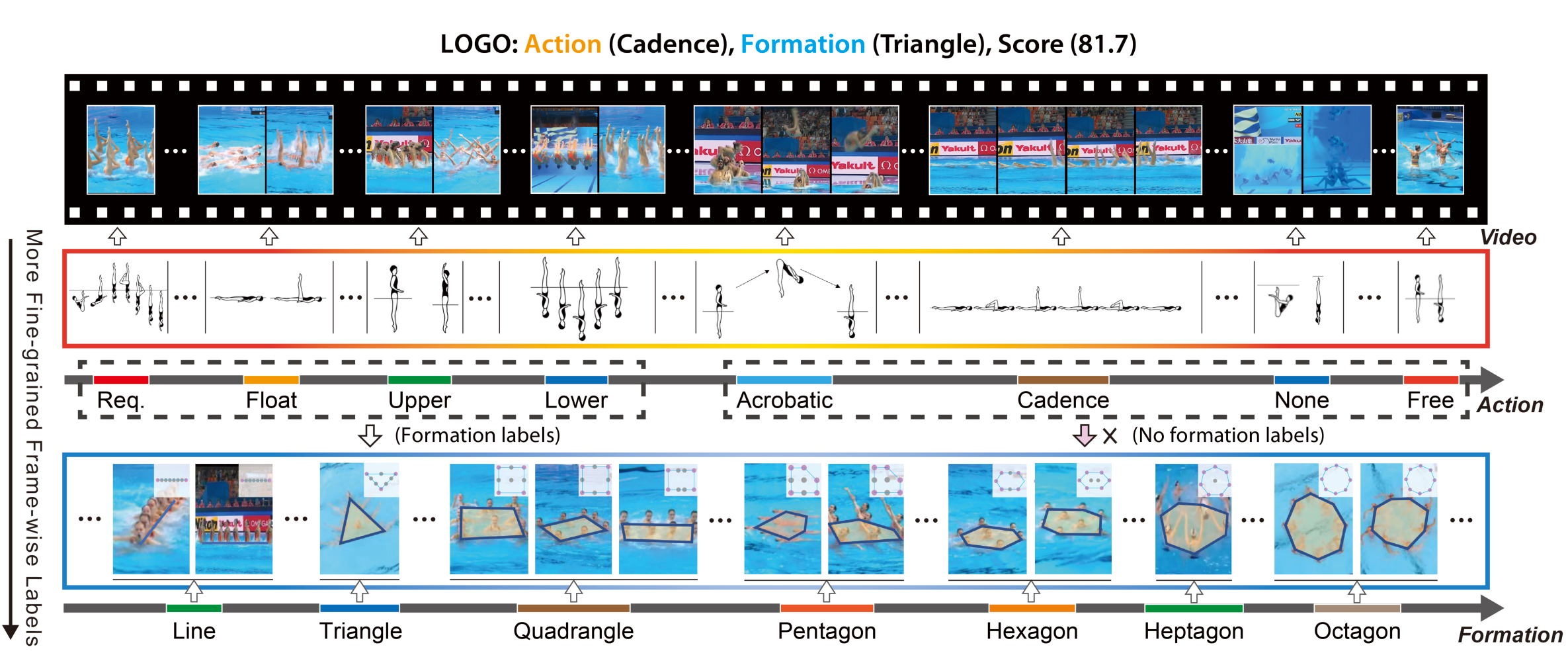

Shiyi Zhang, Wenxun Dai, Sujia Wang, Xiangwei Shen, Jiwen Lu, Jie Zhou, Yansong Tang
IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023. [PDF] [Project Page]
LOGO is a new multi-person long-form video dataset for action quality assessment.

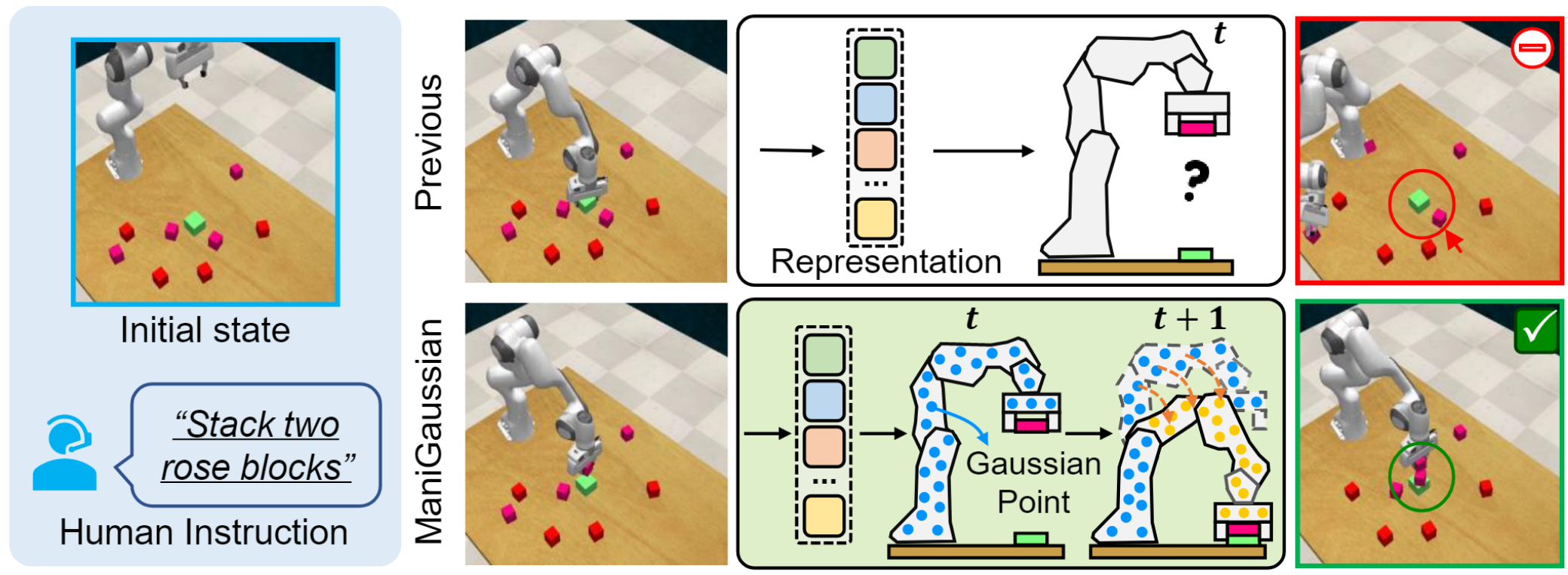

Guanxing Lu, Shiyi Zhang, Ziwei Wang, Changliu Liu, Jiwen Lu, Yansong Tang
European Conference on Computer Vision (ECCV), 2024. [PDF] [Project Page]
We propose ManiGaussian, a dynamic Gaussian Splatting method for multi-task robotic manipulation that mines scene dynamics via future scene reconstruction.